GPT-5

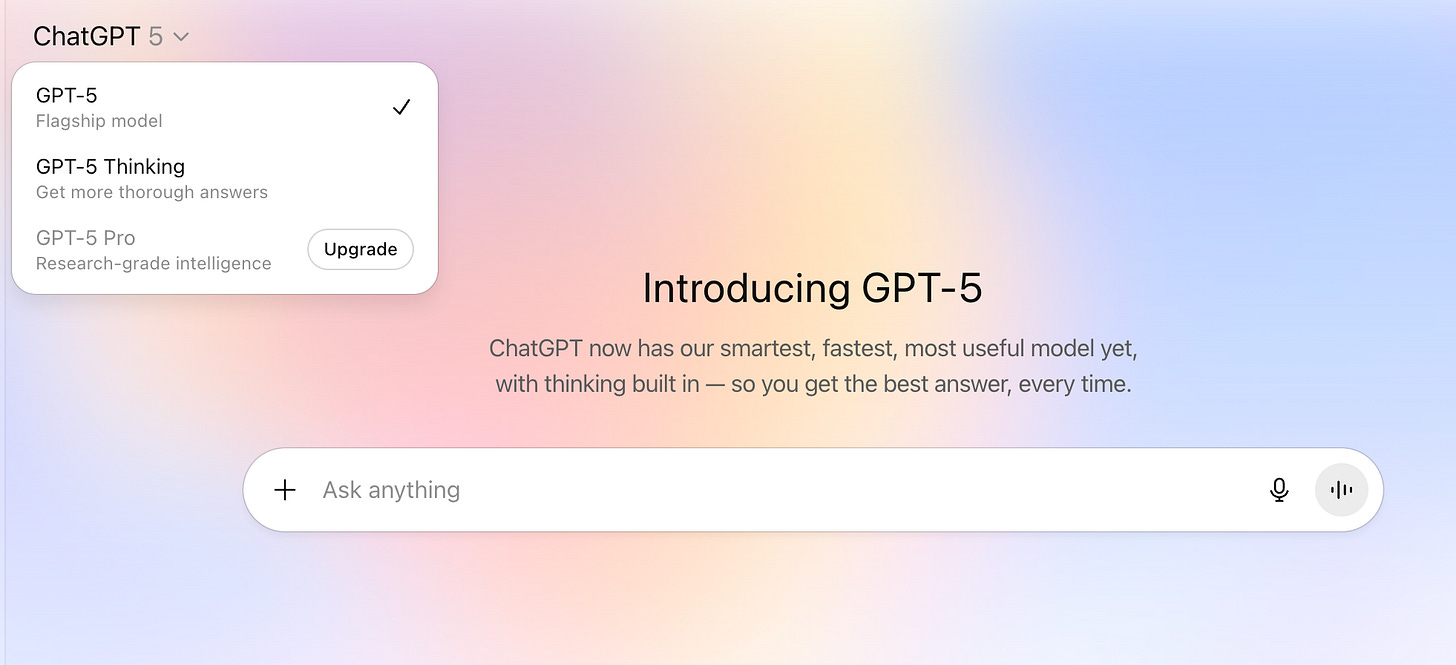

As expected, OpenAI announced GPT-5 on Aug 7. They highlighted its improvements in coding, creative writing, and answering health questions. It also includes a "real-time router" that picks the right model for each question, so users no longer need to select one manually. Many users didn't even know the more advanced thinking models existed, so they'll now see more sophisticated answers for the first time. The rollout had some initial hiccups that have apparently been fixed, although I still see two options in my dropdown.

This is still much less confusing than their previous zoo of options such as 4o, 4.1, 4.5, o3, and o4-mini. However, since some people prefer 4o, OpenAI plans to add it back as an option.

Initial thoughts

The main thing I notice when using ChatGPT with GPT-5 is how proactive it is. It generally ends with a follow-up question offering to do additional research or analysis. I can keep responding "OK" and its answers stay useful (to a point). Once they improve their "agent" mode, I can see people just responding "OK" without paying attention until ChatGPT has turned the entire universe into paperclips…

Coding

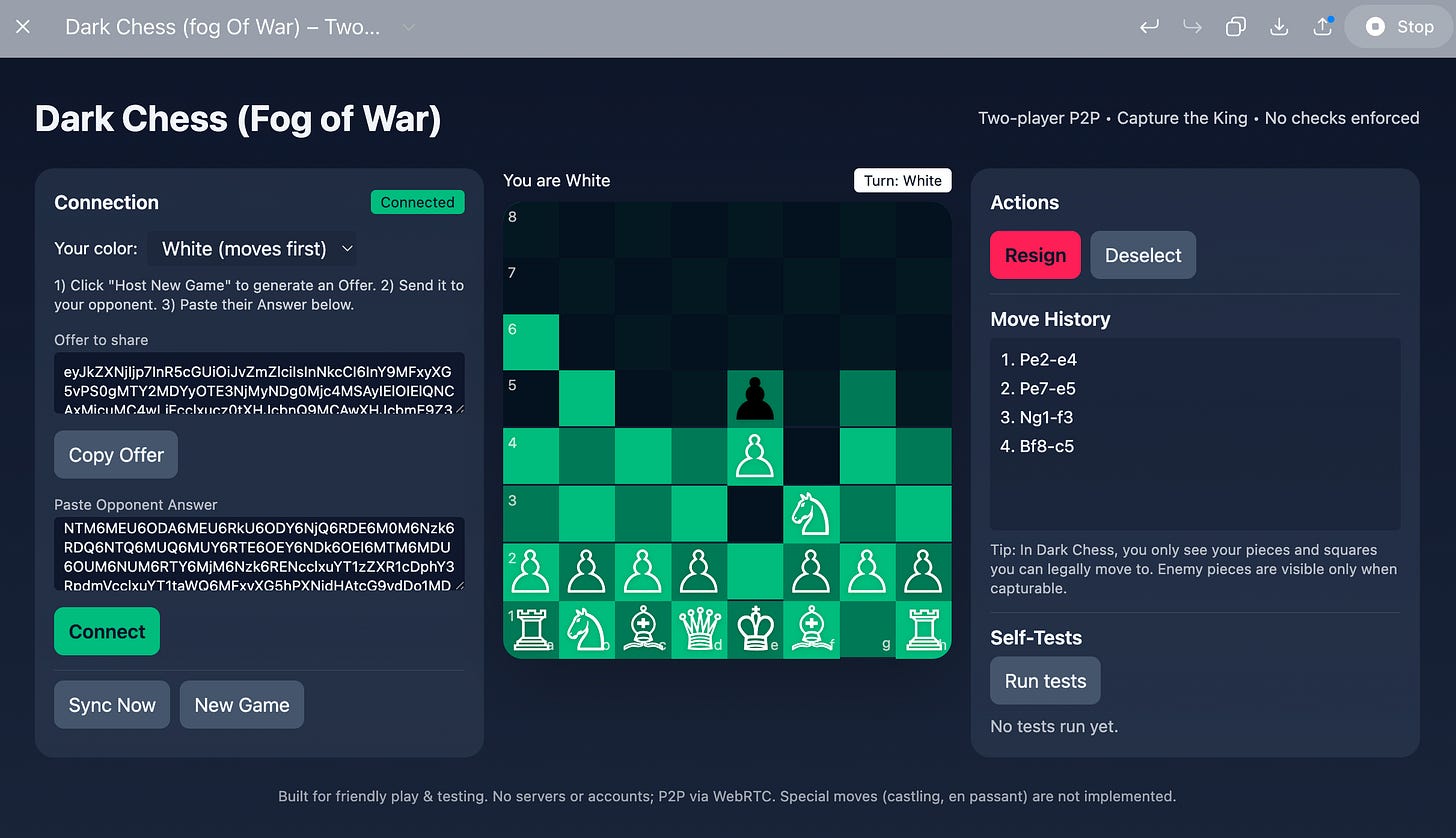

Dark Chess is a fun chess variant that adds a "fog of war" element: you cannot see all of your opponent's pieces. I had previously generated a version of this game using AI Studio's build apps capability, and it worked reasonably well but couldn't actually be played online. ChatGPT, however, used WebRTC to build a working peer-to-peer game. It still needs a few fixes, but it's pretty cool for a couple of prompts! Note that ChatGPT's editor doesn't work well for iterating on an app, so if you're doing real coding, you're better off using another tool connected to OpenAI's API.

Health

My teeth had some issues recently, and every dentist I saw in NYC gave a different opinion about which specific teeth needed to be addressed, though they all agreed on one problematic tooth. When I uploaded my x-rays to previous versions of ChatGPT, it gave a completely wrong diagnosis. This time, it said it couldn't tell from the first x-ray I uploaded; credit to it for admitting it doesn't know. After I uploaded more x-rays, it correctly identified the problematic tooth. It also seems to have mistakenly flagged another tooth, but it's hard to know who's right at this point. That it knows anything here is impressive, since I wouldn't expect its training data to include huge datasets of dental x-rays. There seems to be a lot of room for improvement if AI models are fine-tuned on large datasets from specific medical areas.